I’ve been running across a number of questions about Virtual IP (VIP) addresses for Oracle RAC lately. I hope this blog post can help shed some light on what a VIP is, how it works, and why Oracle RAC leverages VIPs. Before I go further, I should explain that I am not a network specialist. In fact, computer networking is probably my weakest area of everything that goes on in IT shops. So please do not flame me if I do not get the networking details 100% accurate. I will explain this in terms that have served me well in my DBA career, especially working with Oracle RAC.

Most people are familiar with connecting an application, SQL*Plus or otherwise, to a single-instance database server. In the end, their connection request is sent to a specific IP address. In our diagram below, the end user wants to connect to 192.168.1.1 to access the database. The network request gets routed to the network switch that the database server is connected to. The switch passes the request to the server that has the requested IP address.

Most Oracle DBAs do not have a problem understanding this concept. Life does get a little more complicated when RAC is deployed, as there are multiple machines (nodes) in the cluster. In the next diagram, we have a two-node RAC cluster, each node having a different IP address.

The end user doesn’t care which node his session is connected to. The end user just wants access to the cluster. Either node will suffice. The end user’s TNSNAMES.ORA configuration may say to try 192.168.1.1 first and if that doesn’t work, try 192.168.1.2 instead. In this way, Oracle RAC is providing a High Availability solution.
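The "try 192.168.1.1 first, then 192.168.1.2" behaviour can be sketched in a few lines of Python. This is a minimal sketch, not how Oracle Net itself is implemented: `connect_first_available` is a hypothetical helper, the addresses come from the diagram, and port 1521 is assumed because it is the common default listener port. The `connect` parameter defaults to the standard library's `socket.create_connection` so it can be swapped out for testing.

```python
import socket
from typing import Callable, Sequence

def connect_first_available(
    addresses: Sequence[str],
    port: int = 1521,                              # assumed listener port
    timeout: float = 5.0,                          # per-address connect timeout, in seconds
    connect: Callable = socket.create_connection,
):
    """Hypothetical helper: try each address in order, return the first that connects."""
    errors = {}
    for host in addresses:
        try:
            # Blocks for at most `timeout` seconds if the host never answers.
            return host, connect((host, port), timeout=timeout)
        except OSError as exc:                     # refused, unreachable, or timed out
            errors[host] = exc                     # remember why, then move to the next address
    raise ConnectionError(f"no address reachable: {errors}")
```

The explicit `timeout` matters: if it is left to the operating system's TCP defaults, a dead first address can stall the loop for a long time before the second address is ever tried.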

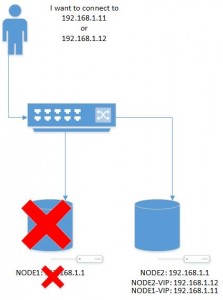

Now we come to the entire reason for Virtual IP addresses to be used. What if the end user is trying to access the first node (192.168.1.1) but it is unavailable? The node is down for some reason. The end user could easily connect to the 192.168.1.2 node. However, due to the way TCP/IP networks work, it can take up to ten minutes for the network connection to 192.168.1.1 to timeout before 192.168.1.2 will be accessed. The lengthy TCP/IP timeout wait is the sole reason for Oracle RAC to leverage VIPs. We simply want to reduce the time to access another node in the cluster should our requested node be unavailable.
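A back-of-the-envelope model shows why the timeout dominates. This is a toy cost model assumed for illustration: an address whose host is down costs the full TCP connect timeout, and a live address costs one round trip. The ten-minute figure is the worst case from the paragraph above, expressed in milliseconds; the 1 ms round trip is an arbitrary assumption.

```python
from typing import Dict, Optional, Sequence

def time_to_connect_ms(
    addresses: Sequence[str],
    host_is_up: Dict[str, bool],
    tcp_timeout_ms: int,
    rtt_ms: int,
) -> Optional[int]:
    """Toy model: total time spent before a connection succeeds, or None if none does."""
    elapsed = 0
    for addr in addresses:
        if host_is_up[addr]:
            return elapsed + rtt_ms        # connected after one round trip
        elapsed += tcp_timeout_ms          # waited out the full timeout on a dead host
    return None

TEN_MINUTES_MS = 10 * 60 * 1000           # 600,000 ms
node1_down = {"192.168.1.1": False, "192.168.1.2": True}
print(time_to_connect_ms(["192.168.1.1", "192.168.1.2"], node1_down, TEN_MINUTES_MS, 1))
```

With NODE1 down, the model spends 600,000 ms before it even tries NODE2, and only 1 ms actually connecting. Shrinking that first term is the whole point of a VIP.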

A traditional IP is usually bound to the network card on the server. The IT admin will configure the server to always use that specific IP address, and no other device on the network will use the same IP. Note: I’m keeping this simple and deliberately ignoring DHCP and lease registration, for those who are familiar with those topics.

A virtual IP address is not bound to the network card. It isn’t even defined in the OS. The VIP is not a real IP address, in the same way that a Virtual Machine is not a real system. It just appears to be real to those using it. So let’s look at our two-node cluster, but this time with VIPs defined for it.

Our servers still have their regular IP addresses, 192.168.1.1 and 192.168.1.2 for NODE1 and NODE2 respectively. These two nodes also have VIPs associated with them. NODE1-VIP and NODE2-VIP are denoted as IP addresses 192.168.1.11 and 192.168.1.12 respectively. Each node in the RAC cluster has its regular IP address and a VIP. It may also be beneficial to know that the host name and the VIP names are often defined in DNS so that we do not have to remember the IP addresses specifically.

Notice that the end user is now requesting to access one of the VIPs. The only people who should be using the traditional IP addresses are IT administrators who need to perform work on the server. End users and any and all applications should connect with the VIP.
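The inventory described above, and the rule that applications use only the VIPs, can be written down as data. This is an illustrative sketch: the `Node` class and `client_addresses` function are hypothetical names, and the host names and addresses are the ones from the diagram.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Node:
    host: str         # public host name, for administrators
    public_ip: str    # traditional IP, configured in the OS
    vip_name: str     # virtual host name, for applications
    vip: str          # virtual IP, managed by Grid Infrastructure

CLUSTER = [
    Node("NODE1", "192.168.1.1", "NODE1-VIP", "192.168.1.11"),
    Node("NODE2", "192.168.1.2", "NODE2-VIP", "192.168.1.12"),
]

def client_addresses(nodes: List[Node]) -> List[str]:
    """Addresses an application should connect to: the VIPs, never the public IPs."""
    return [n.vip for n in nodes]

print(client_addresses(CLUSTER))
```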

Remember how I said earlier that the VIP isn’t even defined in the OS? Well, if that’s the case, then how does everything know that the VIP is assigned to that node? This is all handled by Grid Infrastructure (GI). When GI is installed, one of the Oracle Universal Installer (OUI) screens will ask for the names of the nodes in the cluster (the host names) along with the virtual host names. The screenshot below shows how the 11g GI installation looked when asking for that information (screenshot from Oracle documentation).


The public hostname is configured in the OS by the administrator. The Virtual IP is not configured in the OS but Grid Infrastructure knows about it. To understand how this works, we need to digress a bit and understand Address Resolution Protocol (ARP).

When a server is started up and its networking components are initiated, Address Resolution Protocol is the mechanism that tells the switch in front of the server to route all traffic for its IP address to the MAC address of its network card. The OS, through ARP, tells the switch to go to NODE1 for 192.168.1.1 requests.
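Real networks are more layered than this: hosts and routers keep IP-to-MAC ARP caches, and the switch separately learns which port each MAC address sits behind. The toy model below collapses all of that into a single dictionary, the same simplification the paragraph above makes. The MAC address is hypothetical.

```python
from typing import Dict, Optional

# Toy model: one table mapping an IP address to the MAC address that receives its traffic.
arp_table: Dict[str, str] = {}

NODE1_MAC = "00:16:3e:00:00:01"   # hypothetical NIC address for NODE1

def announce(ip: str, mac: str) -> None:
    """An ARP announcement: 'send traffic for ip to mac'. A later announcement overwrites an earlier one."""
    arp_table[ip] = mac

def resolve(ip: str) -> Optional[str]:
    """Where traffic for ip is delivered, or None if nobody has claimed it (unroutable)."""
    return arp_table.get(ip)

# NODE1's operating system claims its own address while bringing up networking.
announce("192.168.1.1", NODE1_MAC)
```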

When Grid Infrastructure starts, one of its startup tasks is to do something similar. GI, through ARP, tells the switch to go to NODE1 for all NODE1-VIP (192.168.1.11) requests. Until GI starts the VIP, that VIP address is unroutable.
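The usual way for software to claim an address like this is a gratuitous ARP: a broadcast ARP reply in which the sender IP and target IP are the same address. The sketch below only builds such a frame for NODE1-VIP using the RFC 826 packet layout; it is not Grid Infrastructure's code, the MAC address is hypothetical, and actually sending the frame would need a raw socket and root privileges, which is left out.

```python
import socket
import struct

def gratuitous_arp(ip: str, mac: str) -> bytes:
    """Build an Ethernet frame carrying a gratuitous ARP reply announcing ip at mac."""
    mac_bytes = bytes.fromhex(mac.replace(":", ""))
    ip_bytes = socket.inet_aton(ip)
    broadcast = b"\xff" * 6
    # Ethernet header: destination (broadcast), source, EtherType 0x0806 (ARP).
    ethernet = broadcast + mac_bytes + struct.pack("!H", 0x0806)
    # ARP payload for Ethernet/IPv4. Sender and target IP are both the announced
    # address, which is what makes the reply "gratuitous".
    arp = struct.pack(
        "!HHBBH6s4s6s4s",
        1,                      # hardware type: Ethernet
        0x0800,                 # protocol type: IPv4
        6, 4,                   # hardware / protocol address lengths
        2,                      # opcode: reply
        mac_bytes, ip_bytes,    # sender MAC / sender IP
        broadcast, ip_bytes,    # target MAC / target IP
    )
    return ethernet + arp

frame = gratuitous_arp("192.168.1.11", "00:16:3e:00:00:01")   # NODE1-VIP on NODE1's NIC
```

The frame is 42 bytes: a 14-byte Ethernet header plus a 28-byte ARP payload.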

Now here’s the magic part: when NODE1 goes down, GI on another node in the cluster will detect the outage. GI will then perform a new ARP operation that informs the switch to route the VIP to another node in the cluster. Because the VIP is virtual, it can be re-routed on the fly. In the diagram below, NODE1 has failed. Its traditional IP is no longer available as well. GI has re-ARPed the VIP to the remaining node in the cluster.
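The failover can be modelled with the same kind of toy IP-to-MAC table. This is a simplified sketch with hypothetical MAC addresses and a hypothetical `fail_node` function: when NODE1 dies, its VIP is re-announced with NODE2's MAC address, while NODE1's traditional IP still points at the dead network card.

```python
from typing import Dict, Iterable, Set

NODE1_MAC = "00:16:3e:00:00:01"   # hypothetical
NODE2_MAC = "00:16:3e:00:00:02"   # hypothetical

# Toy table once both nodes and both VIPs are up.
arp_table: Dict[str, str] = {
    "192.168.1.1": NODE1_MAC,     # NODE1 traditional IP
    "192.168.1.2": NODE2_MAC,     # NODE2 traditional IP
    "192.168.1.11": NODE1_MAC,    # NODE1-VIP
    "192.168.1.12": NODE2_MAC,    # NODE2-VIP
}
live_macs: Set[str] = {NODE1_MAC, NODE2_MAC}

def reachable(ip: str) -> bool:
    """Traffic for ip gets answered only if it is mapped to a live network card."""
    return arp_table.get(ip) in live_macs

def fail_node(dead_mac: str, surviving_mac: str, vips: Iterable[str]) -> None:
    """Mark a node dead and re-ARP every VIP it owned to the surviving node."""
    live_macs.discard(dead_mac)
    for vip in vips:
        if arp_table.get(vip) == dead_mac:
            arp_table[vip] = surviving_mac   # same address, new owner

fail_node(NODE1_MAC, NODE2_MAC, ["192.168.1.11", "192.168.1.12"])
```

Only addresses passed in as VIPs are moved, so 192.168.1.1 stays unreachable, matching the diagram.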

The re-ARPing of the VIP can be accomplished in seconds. The end user may experience a brief pause in their network communication between the application and the database instance, but this is much, much less than if we waited for TCP/IP timeouts.

Oracle 11gR2 introduced the SCAN Listeners. An Oracle RAC cluster can have at most three SCAN Listeners. The SCAN name is defined in DNS, and DNS will round-robin the SCAN name resolution to one of up to three different IP addresses.

In the diagram below, our two-node cluster now has two SCAN listeners. The end user makes a connection request to my-scan.acme.com and DNS resolves the name to either 192.168.1.21 or 192.168.1.22.
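Round-robin resolution of the SCAN name can be sketched as a resolver that rotates through the name's addresses. This is a toy: real DNS servers typically return all the addresses with their order rotated, and the client usually tries the first one; this sketch returns only that first address. `RoundRobinResolver` is a hypothetical name, and my-scan.acme.com is the example name from the text.

```python
from itertools import cycle
from typing import Dict, List

class RoundRobinResolver:
    """Toy DNS server that hands out a name's addresses in rotation."""

    def __init__(self, records: Dict[str, List[str]]):
        self._cycles = {name: cycle(addrs) for name, addrs in records.items()}

    def resolve(self, name: str) -> str:
        # Each lookup returns the next address in the rotation.
        return next(self._cycles[name])

dns = RoundRobinResolver({"my-scan.acme.com": ["192.168.1.21", "192.168.1.22"]})
answers = [dns.resolve("my-scan.acme.com") for _ in range(4)]
```

Successive lookups alternate between the two SCAN VIPs, which spreads new connection requests across both SCAN listeners.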

As shown above, those two SCAN VIPs are assigned to different nodes in the cluster. If NODE1 goes down, Grid Infrastructure will relocate both NODE1-VIP and MY-SCAN (192.168.1.21) to a surviving node in the cluster, through the same re-ARP operation we talked about earlier. The newer SCAN listeners and their VIPs are handled the same way as the old-style VIPs.
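From Grid Infrastructure's point of view, node VIPs and SCAN VIPs are simply addresses currently hosted on some node. The sketch below tracks that placement in a dictionary and moves everything off a failed node. It is illustrative only: `relocate_from` is a hypothetical function, and picking a single named survivor is an assumption that fits this two-node example, not a description of GI's placement policy.

```python
from typing import Dict

# Which node currently hosts each VIP (node VIPs and SCAN VIPs alike).
placement: Dict[str, str] = {
    "192.168.1.11": "NODE1",   # NODE1-VIP
    "192.168.1.12": "NODE2",   # NODE2-VIP
    "192.168.1.21": "NODE1",   # SCAN VIP (MY-SCAN)
    "192.168.1.22": "NODE2",   # SCAN VIP
}

def relocate_from(failed: str, survivor: str) -> Dict[str, str]:
    """Move every VIP hosted on the failed node to the survivor; return what moved."""
    moved = {}
    for vip, node in placement.items():
        if node == failed:
            placement[vip] = survivor   # in practice, each move is announced with a re-ARP
            moved[vip] = survivor
    return moved

moved = relocate_from("NODE1", "NODE2")
```

Both NODE1-VIP and the SCAN VIP that lived on NODE1 end up on NODE2; the VIPs already on NODE2 are untouched.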

To recap, Virtual IP addresses are used to provide quicker failover of network communications between the application and the nodes in the cluster. The OS uses Address Resolution Protocol to let the network switch know to route connections to the host. Grid Infrastructure uses the same ARP operations to let the network switch know where to route traffic for the VIP and the SCAN VIP. Should a node go down, GI will re-ARP the VIP and SCAN VIP to another node in the cluster.

3 comments

very clear explanation, thanks!!

so ARP is the key behind VIP relocation/failover.

Because allocation and configuration of addresses is performed automatically with GNS, no further configuration is required. Oracle Clusterware provides dynamic network configuration as nodes are added to or removed from the cluster. The following example is provided only for information.