Every once in a while, no matter which company I work for, I am asked to set up a new production database. I was working on this very task today when I started thinking about how much work it was to create a brand new database in the past, how much the DBCA handles for us today, and how much is still left to do.

Currently, we have a development and test database for our third-party application. We will be rolling out the application to production by the end of the week, so I was tasked with setting up a production version of this database. The production database server is a 3-node RAC cluster which has already been set up for me because we are currently running two other databases on the cluster. So this saves me the step of installing and configuring Grid Infrastructure and the RDBMS software. But as I started setting up the database, I got to thinking about how much work I still had left to do. And since we rarely set up brand new production databases, some of these tasks are not as easily remembered as others. Below are the steps I went through today to get the production database up and running.

1. Using the dev/test databases as my guide, I determined my memory and disk storage requirements.

2. I verified the production RAC cluster had enough memory to support the new database instances.

3. I worked with my Storage Admin to get the necessary disk storage mounted on the cluster.

4. I then fired up the DBCA to create the brand new database. I walked through the wizard and filled in appropriate values and then let DBCA do its magic.

5. I really do not like how the DBCA lets me create/allocate redo logs, so after the database was created, I created my own redo log groups (multiplexed, of course) and dropped the redo log groups the DBCA created for me.

6. I can never figure out how to add a 3rd control file in the DBCA. So after the database is created, I shut it down, make a 3rd copy of the control file, update the SPFILE with the fact that there are now 3 control files and start the database.

7. The DBCA put my password file and spfile in locations that are not optimal for me. So I moved them. In $ORACLE_HOME/dbs I created softlinks pointing to the new locations. Then I used srvctl to update the spfile location in CRS.

8. I’ve never once used the DBCA to set up archivelog mode. So I always skip that part of the DBCA. In addition, I like the idea of not archiving my redo logs when the DBCA is creating the database to speed up that process. So at this point, I set up archive logging for the database.

9. The database will be used with a Standby and I like to ensure I have a log switch at least once per hour, so I set ARCHIVE_LAG_TARGET to 3600.
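Steps 5, 6 and 9 come down to a handful of SQL statements that are easy to forget between builds. Below is a minimal Python sketch that only generates those statements so they can be reviewed before being run in SQL*Plus. The directory paths, the 512M size, the group numbering starting at 11, and the two-groups-per-thread choice are all hypothetical values for illustration, not the ones I actually used.

```python
def redo_log_statements(threads, groups_per_thread, dirs, size="512M", first_group=11):
    """Build ADD LOGFILE statements: one multiplexed group per loop pass.

    Each group gets one member in every directory listed in `dirs`,
    which is what multiplexing the redo logs means here.
    """
    stmts = []
    group = first_group
    for thread in range(1, threads + 1):          # one redo thread per RAC instance
        for _ in range(groups_per_thread):
            members = ", ".join(
                f"'{d}/redo{group:02d}{chr(ord('a') + i)}.log'"
                for i, d in enumerate(dirs)
            )
            stmts.append(
                f"ALTER DATABASE ADD LOGFILE THREAD {thread} "
                f"GROUP {group} ({members}) SIZE {size};"
            )
            group += 1
    return stmts


def control_files_statement(paths):
    """Record the full control file list in the SPFILE (takes effect on restart)."""
    quoted = ",".join(f"'{p}'" for p in paths)
    return f"ALTER SYSTEM SET control_files={quoted} SCOPE=SPFILE SID='*';"


def archive_lag_statement(seconds):
    """Force a log switch at least every `seconds` seconds on all instances."""
    return f"ALTER SYSTEM SET archive_lag_target={seconds} SCOPE=BOTH SID='*';"


if __name__ == "__main__":
    # Hypothetical mount points for a 3-node cluster
    for stmt in redo_log_statements(3, 2, ["/u02/oradata/PROD", "/u03/oradata/PROD"]):
        print(stmt)
    print(archive_lag_statement(3600))
```

The generated statements only add the new groups. The groups the DBCA created still have to be dropped by hand once they are no longer current or active.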

At this point, the bare bones database is set up and ready for use. Now it's time to get the database ready for our application.

10. I set up any required tablespaces for the application.

11. I set up any required users for the application.

12. I changed the database’s default tablespace to one of the ones I created above. Then I dropped the USERS tablespace.

13. Since this is a RAC database, we need to set up the service for the application to connect.

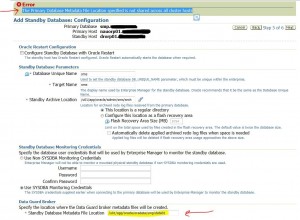

14. Now that the database is ready for the application, we need to set up the Standby database. This was easily done by using the Add Standby Database wizard in Grid Control.

15. Our Standby database is on a 2-node RAC cluster. The Add Standby Database wizard creates a single-instance database so the Convert to Cluster Database wizard was run in Grid Control to make the Standby a RAC database.
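For steps 12 and 13, the statements and commands look roughly like the ones produced by this small Python sketch. The database name `PROD`, the tablespace `APP_DATA`, the service `app_svc`, and the instance names are hypothetical placeholders. The `-d`, `-s` and `-r` options are the short srvctl flags from the 10g/11g releases; option names differ between releases, so check `srvctl add service -h` on your version. The `INCLUDING CONTENTS AND DATAFILES` clause is also my assumption about how you would want to drop USERS.

```python
def default_tablespace_statements(new_default, old_default="USERS"):
    """SQL to repoint the database default tablespace, then drop the old one."""
    return [
        f"ALTER DATABASE DEFAULT TABLESPACE {new_default};",
        f"DROP TABLESPACE {old_default} INCLUDING CONTENTS AND DATAFILES;",
    ]


def service_commands(db_name, service, instances):
    """srvctl argument lists to add and start a service preferred on all instances."""
    preferred = ",".join(instances)
    return [
        ["srvctl", "add", "service", "-d", db_name, "-s", service, "-r", preferred],
        ["srvctl", "start", "service", "-d", db_name, "-s", service],
    ]


if __name__ == "__main__":
    for stmt in default_tablespace_statements("APP_DATA"):
        print(stmt)
    for cmd in service_commands("PROD", "app_svc", ["PROD1", "PROD2", "PROD3"]):
        print(" ".join(cmd))
```

The commands are returned as argument lists rather than strings so they could be handed to `subprocess.run` unchanged once they have been reviewed.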

Finally, the last step was to ensure any maintenance tasks were extended to the new database. For example, cron jobs to delete old log files needed to be modified for the new instance.
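As an illustration of that kind of maintenance job, here is a minimal Python sketch of a cleanup script that cron could call for each instance. It is not the script we actually run. The `*.trc` pattern, the 30-day retention, and the dry-run default are assumptions chosen for the example.

```python
import time
from pathlib import Path

SECONDS_PER_DAY = 86400


def is_expired(mtime, now, max_age_days):
    """True when a file last modified at `mtime` is older than the retention."""
    return (now - mtime) > max_age_days * SECONDS_PER_DAY


def purge_old_logs(directory, pattern="*.trc", max_age_days=30, dry_run=True):
    """Delete matching files older than `max_age_days`; return the paths selected.

    With dry_run=True nothing is deleted, which makes it safe to check
    what a new instance's directory would lose before enabling the job.
    """
    now = time.time()
    selected = []
    for path in Path(directory).glob(pattern):
        if path.is_file() and is_expired(path.stat().st_mtime, now, max_age_days):
            if not dry_run:
                path.unlink()
            selected.append(path)
    return selected
```

Extending the job to the new database then means adding one more call, or one more crontab line, pointing at each new instance's trace directory.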

Wow! That’s a lot of work to set up an initial database in our production environment. As I said in the beginning, the DBCA does a lot of work for us now. And Grid Control automates a lot of the Standby creation work as well. But there are still a lot of steps involved.